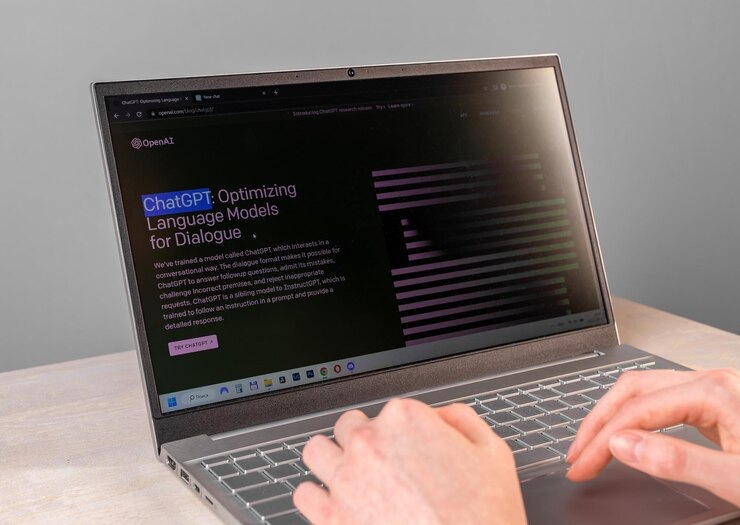

Dark web forums are revealing a troubling trend: growing criminal interest in ChatGPT, the large language model from OpenAI. A recent analysis by Kaspersky’s Digital Footprint Intelligence service identified nearly 3,000 posts on dark web forums discussing the illicit use of ChatGPT and other large language models (LLMs). This activity shows how cybercriminals are trying to exploit artificial intelligence (AI) for malicious purposes.

The Scope of the Threat:

The dark web chatter reveals a diverse range of malicious applications for ChatGPT. Some key areas of concern include:

1. Malware Development:

Criminals are exploring ways to leverage ChatGPT’s code-generation capabilities to automate the creation of sophisticated malware, potentially bypassing traditional detection methods.

2. Phishing and Scams:

The model’s ability to craft natural-sounding text is being misused to create convincing phishing emails and social media scams, tricking unsuspecting users into revealing sensitive information or downloading malware.

3. Financial Fraud:

Forum posts discuss using ChatGPT to generate fake financial documents and misleading market information, which could support schemes like identity theft and investment scams.

4. Automated Bot Attacks:

Cybercriminals are discussing using ChatGPT to create AI-powered bots that can automate tasks like brute-force attacks and credential stuffing, increasing the efficiency and scale of their operations.

Beyond the Code:

The dark web discussions extend beyond technical applications. There’s evidence of a thriving market for stolen ChatGPT accounts, indicating that cybercriminals are actively seeking access to the model’s capabilities. Some forums also showcase jailbroken versions of ChatGPT, in which the model’s built-in restrictions are bypassed so that it can be used for malicious purposes.

Conclusion:

The rise of ChatGPT-related cybercrime highlights the urgent need for responsible development and deployment of AI technologies. While LLMs offer immense potential for good, their misuse can have far-reaching consequences. OpenAI and other developers must prioritize robust safeguards and security measures to mitigate the risks associated with these powerful tools. Additionally, cybersecurity awareness campaigns and collaboration between tech companies, law enforcement, and the public are crucial to combat the evolving threat of AI-powered cybercrime.

FAQs:

Q: Is ChatGPT inherently dangerous?

A: No, ChatGPT itself is not inherently dangerous. Its capabilities can be used for both positive and negative purposes, depending on the intentions of the user.

Q: How can I protect myself from ChatGPT-related cybercrime?

A: Maintain vigilance against phishing attempts, exercise caution when downloading software or clicking on links, and use strong passwords and multi-factor authentication. Stay informed about evolving cybercrime trends and update your software regularly.
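
As one illustration of the "strong passwords" advice, here is a minimal Python sketch that generates a random password with the standard library's `secrets` module. The function name `generate_password` and the 12-character minimum are assumptions made for this example, not recommendations taken from the Kaspersky analysis.

```python
import secrets
import string


def generate_password(length: int = 16) -> str:
    """Return a random password containing lowercase, uppercase, digit and symbol characters."""
    # The 12-character floor is an illustrative assumption, not an official rule.
    if length < 12:
        raise ValueError("use at least 12 characters")

    alphabet = string.ascii_letters + string.digits + string.punctuation
    while True:
        # secrets.choice draws from a cryptographically secure random source.
        candidate = "".join(secrets.choice(alphabet) for _ in range(length))
        # Retry until every character class is represented at least once.
        if (any(c.islower() for c in candidate)
                and any(c.isupper() for c in candidate)
                and any(c.isdigit() for c in candidate)
                and any(c in string.punctuation for c in candidate)):
            return candidate


if __name__ == "__main__":
    print(generate_password())
```

In practice, a reputable password manager does the same job and also stores the result, so a unique password can be used for every account.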

Q: What can be done to prevent the misuse of LLMs?

A: Responsible development practices, including robust security measures and ethical guidelines, are crucial. Additionally, promoting public awareness about the potential risks of AI and fostering collaboration between stakeholders can help mitigate the threat.